Tutorial: Using R and Twitter to Analyse Consumer Sentiment

This year I have been working with a Singapore Actuarial Society working party to introduce Singaporean actuaries to big data applications, and the new techniques and tools they need in order to keep up with this technology. The working group’s presentation at the 2015 General Insurance Seminar was well received, and people want more. So we are going to run some training tutorials, and want to extend our work.

One of those extensions is text mining. Inspired by a CAS paper by Roosevelt C. Mosley Jr., I thought that I’d try to create a simple working example of Twitter text mining, using R. I thought that I could just Google for an R script, make some minor changes, and run it. If only it were as simple as that…

I quickly ran into problems that none of the on-line blogs and documentation fully deal with:

- Twitter changed its search API to require authorisation. That authorisation process is a bit time-consuming and even the most useful blogs got some minor but important details wrong.

- CRAN has withdrawn its sentiment package, meaning that I couldn’t access the key R library that makes the example interesting.

After much experimentation, and with the help of some R experts, I finally created a working example. Here it goes, step by step:

STEP 1: Log on to https://apps.twitter.com/

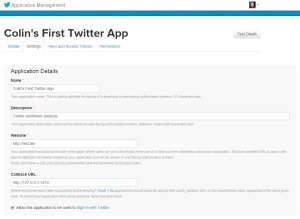

Just use your normal Twitter account login. The screen should look like this:

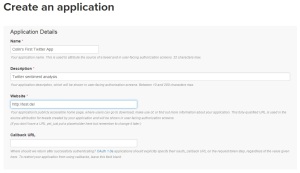

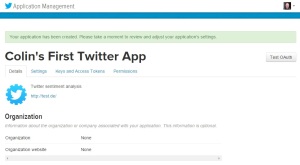

STEP 2: Create a New Twitter Application

Click on the “Create New App” button, then you will be asked to fill in the following form:

Choose your own application name, and your own application description. The website needs to be a valid URL. If you don’t have your own URL, then JULIANHI recommends that you use http://test.de/ , then scroll down the page.

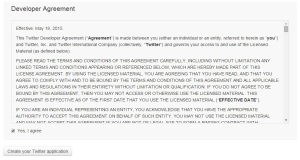

Click “Yes, I Agree” for the Developer Agreement, and then click the “Create your Twitter application” button. You will see something like this:

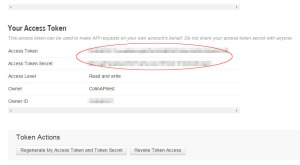

Go to the “Keys and Access Tokens” tab. Then look for the Consumer Key and the Consumer Secret. I have circled them in the image below. We will use these keys later in our R script, to authorise R to access the Twitter API.

Scroll down to the bottom of the page, where you will find the “Your Access Token” section.

Click on the button labelled “Create my access token”.

Look for the Access Token and Access Token Secret. We will use these in the next step, to authorise R to access the Twitter API.

STEP 3: Authorise R to Access Twitter

First we need to load the Twitter authorisation libraries. I like to use the pacman package to install and load my packages. The other packages we need are:

- twitteR : which gives an R interface to the Twitter API

- ROAuth : OAuth authentication to web servers

- RCurl : http requests and processing the results returned by a web server

The R script is below. But first remember to replace each “xxx” with the respective token or secret you obtained from the Twitter app page.

# authorisation

if (!require('pacman')) install.packages('pacman')

pacman::p_load(twitteR, ROAuth, RCurl)

api_key = 'xxx'

api_secret = 'xxx'

access_token = 'xxx'

access_token_secret = 'xxx'

# Set SSL certs globally

options(RCurlOptions = list(cainfo = system.file('CurlSSL', 'cacert.pem', package = 'RCurl')))

# set up the URLs

reqURL = 'https://api.twitter.com/oauth/request_token'

accessURL = 'https://api.twitter.com/oauth/access_token'

authURL = 'https://api.twitter.com/oauth/authorize'

twitCred = OAuthFactory$new(consumerKey = api_key, consumerSecret = api_secret, requestURL = reqURL, accessURL = accessURL, authURL = authURL)

twitCred$handshake(cainfo = system.file('CurlSSL', 'cacert.pem', package = 'RCurl'))

After substituting your own token and secrets for “xxx”, run the script. It will open a web page in your browser. Note that on some systems R can’t open the browser automatically, so you will have to copy the URL from R, open your browser, then paste the link into your browser. If R gives you any error messages, then check that you have pasted the token and secret strings correctly, and ensure that you have the latest versions of the twitteR, ROAuth and RCurl libraries by reinstalling them using the install.packages command.
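Before reinstalling anything, it can help to see which packages are actually missing and which versions are installed. The following is a minimal sketch using base R only; `missing_packages` is a hypothetical helper name, not a function from any of these packages.

```r
# Report which of the packages used in this step are not yet installed.
# `missing_packages` is a hypothetical helper, written for illustration.
missing_packages <- function(pkgs) {
  installed <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
  pkgs[!installed]
}

needed <- c("twitteR", "ROAuth", "RCurl")
not_installed <- missing_packages(needed)

if (length(not_installed) > 0) {
  message("Please install: ", paste(not_installed, collapse = ", "))
} else {
  # Print the installed versions so they can be compared with CRAN
  for (p in needed) message(p, " ", as.character(packageVersion(p)))
}
```

If any package is reported as missing or out of date, reinstall it with install.packages and run the authorisation script again.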

The web page will look something like this:

Click the “Authorise app” button, and you will be given a PIN (note that your PIN will be different to the one in my example).

Copy this PIN to the clipboard and then return to R, which is asking you to enter the PIN.

Paste in, or type, the PIN from the Twitter web page, then press Enter. R is now authorised to run Twitter searches. You only need to do this once, but you do need to use your token strings and secret strings again in your R search scripts.

Go back to https://apps.twitter.com/ and go to the “Settings” tab for your application.

For the Callback URL enter http://127.0.0.1:1410 . This will allow us the option of an alternative authorisation method later.

STEP 4: Install the Sentiment Package

Since the sentiment package is no longer available on CRAN, we have to download the archived source code and install it via this R script:

if (!require('pacman')) install.packages('pacman')

pacman::p_load(devtools, installr)

install.Rtools()

install_url('http://cran.r-project.org/src/contrib/Archive/Rstem/Rstem_0.4-1.tar.gz')

install_url('http://cran.r-project.org/src/contrib/Archive/sentiment/sentiment_0.2.tar.gz')

Note that we only have to download and install the sentiment package once.

UPDATE: There’s a new package on CRAN for sentiment analysis, and I have written a tutorial about it.

STEP 5: Create A Script to Search Twitter

Finally we can create a script to search Twitter. The first step is to set up the authorisation credentials for your script. This requires the following packages:

- twitteR : which gives an R interface to the Twitter API

- sentiment : classifies the emotions of text

- plyr : for splitting text

- ggplot2 : for plots of the categorised results

- wordcloud : creates word clouds of the results

- RColorBrewer : colour schemes for the plots and wordcloud

- httpuv : required for the alternative web authorisation process

- RCurl : http requests and processing the results returned by a web server

- base64enc : base64 encoding, required by the browser-based authorisation

if (!require('pacman')) install.packages('pacman')

pacman::p_load(twitteR, sentiment, plyr, ggplot2, wordcloud, RColorBrewer, httpuv, RCurl, base64enc)

options(RCurlOptions = list(cainfo = system.file('CurlSSL', 'cacert.pem', package = 'RCurl')))

api_key = 'xxx'

api_secret = 'xxx'

access_token = 'xxx'

access_token_secret = 'xxx'

setup_twitter_oauth(api_key,api_secret,access_token,access_token_secret)

Remember to replace the “xxx” strings with your token strings and secret strings.
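If you would rather not paste secrets into a script that you might share, one option is to keep them in environment variables and read them at run time. This is only a sketch: the helper name `read_twitter_keys` and the four environment variable names are assumptions made for illustration, so choose your own.

```r
# Read the four Twitter credentials from environment variables.
# The helper name and the variable names below are hypothetical.
read_twitter_keys <- function() {
  vars <- c(api_key             = "TWITTER_API_KEY",
            api_secret          = "TWITTER_API_SECRET",
            access_token        = "TWITTER_ACCESS_TOKEN",
            access_token_secret = "TWITTER_ACCESS_TOKEN_SECRET")
  keys <- Sys.getenv(vars, unset = NA)
  if (anyNA(keys)) {
    stop("Missing environment variables: ",
         paste(vars[is.na(keys)], collapse = ", "))
  }
  names(keys) <- names(vars)
  as.list(keys)
}

# Usage, once the variables are set (for example in your .Renviron file):
# keys <- read_twitter_keys()
# setup_twitter_oauth(keys$api_key, keys$api_secret,
#                     keys$access_token, keys$access_token_secret)
```

The function stops with a message naming any variable that is not set, which is easier to diagnose than an authentication error from Twitter.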

Using the setup_twitter_oauth function with all four parameters avoids the case where R opens a web browser again. But I have found that it can be problematic to get this function to work on some computers. If you are having problems, then I suggest that you try the alternative call with just two parameters:

setup_twitter_oauth(api_key,api_secret)

This alternative way opens your browser and uses your login credentials from your current Twitter session.

Once authorisation is complete, we can run a search. For this example, I am doing a search on tweets mentioning a well-known brand: Starbucks. I am restricting the results to tweets written in English, and I am getting a sample of 10,000 tweets. It is also possible to give date range and geographic restrictions.

# harvest some tweets

some_tweets = searchTwitter('starbucks', n=10000, lang='en')

# get the text

some_txt = sapply(some_tweets, function(x) x$getText())

Please note that the Twitter search API does not return an exhaustive list of tweets that match your search criteria, as Twitter only makes available a sample of recent tweets. For a more comprehensive search, you will need to use the Twitter streaming API, creating a database of results and regularly updating them, or use an online service that can do this for you.
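One simple way to build up your own collection of results over time is to convert each search to a data frame and merge it with what you have already stored, dropping repeats. The sketch below assumes each data frame has an `id` column, as produced by twitteR’s twListToDF function; `merge_tweets` is a hypothetical helper name and the data is made up for illustration.

```r
# Combine stored tweets with newly harvested ones, keeping one row per id.
# Assumes both data frames share the same columns, including `id`.
merge_tweets <- function(stored, fresh) {
  combined <- rbind(stored, fresh)
  combined[!duplicated(combined$id), , drop = FALSE]
}

# Made-up example data standing in for two separate searches
stored <- data.frame(id = c("1", "2"), text = c("first", "second"),
                     stringsAsFactors = FALSE)
fresh  <- data.frame(id = c("2", "3"), text = c("second", "third"),
                     stringsAsFactors = FALSE)

all_tweets <- merge_tweets(stored, fresh)
nrow(all_tweets)
```

Between runs, the combined data frame could be kept on disk with saveRDS and read back with readRDS.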

Now that we have tweet texts, we need to clean them up before doing any analysis. This involves removing content, such as punctuation, that has no emotional content, and removing any content that causes errors.

# remove retweet entities

some_txt = gsub('(RT|via)((?:\\b\\W*@\\w+)+)', '', some_txt)

# remove at people

some_txt = gsub('@\\w+', '', some_txt)

# remove punctuation

some_txt = gsub('[[:punct:]]', '', some_txt)

# remove numbers

some_txt = gsub('[[:digit:]]', '', some_txt)

# remove html links

some_txt = gsub('http\\w+', '', some_txt)

# remove unnecessary spaces

some_txt = gsub('[ \t]{2,}', ' ', some_txt)

some_txt = gsub('^\\s+|\\s+$', '', some_txt)

# define 'tolower error handling' function

try.error = function(x)

{

# create missing value

y = NA

# tryCatch error

try_error = tryCatch(tolower(x), error=function(e) e)

# if not an error

if (!inherits(try_error, 'error'))

y = tolower(x)

# result

return(y)

}

# lower case using try.error with sapply

some_txt = sapply(some_txt, try.error)

# remove NAs in some_txt

some_txt = some_txt[!is.na(some_txt)]

names(some_txt) = NULL
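The cleaning steps above can also be wrapped into a single function, which makes them easy to try on a few hand-written tweets before running them on thousands. This is a sketch rather than a drop-in replacement: `clean_tweets` is a hypothetical name, it uses perl = TRUE for the regular expressions, it collapses runs of spaces to a single space, and it assumes the input text is validly encoded, so it skips the tolower error handling.

```r
# Apply the tutorial's cleaning steps in one pass.
# Assumes validly encoded input, so tolower() is called directly.
clean_tweets <- function(txt) {
  txt <- gsub("(RT|via)((?:\\b\\W*@\\w+)+)", "", txt, perl = TRUE)  # retweet entities
  txt <- gsub("@\\w+", "", txt, perl = TRUE)                        # @people
  txt <- gsub("[[:punct:]]", "", txt, perl = TRUE)                  # punctuation
  txt <- gsub("[[:digit:]]", "", txt, perl = TRUE)                  # numbers
  txt <- gsub("http\\w+", "", txt, perl = TRUE)                     # links
  txt <- gsub("[ \t]{2,}", " ", txt, perl = TRUE)                   # repeated spaces
  txt <- gsub("^\\s+|\\s+$", "", txt, perl = TRUE)                  # leading/trailing
  tolower(txt)
}

# Made-up example tweets
samples <- c("RT @bob: I love #Starbucks coffee!!! http://t.co/abc123  2 cups",
             "Nice   latte via @alice")
clean_tweets(samples)
```

Note that links are removed after punctuation, so by that stage a URL has already been reduced to a single run of letters starting with “http”.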

Now that we have clean text for analysis, we can do sentiment analysis. The classify_emotion function is from the sentiment package and “classifies the emotion (e.g. anger, disgust, fear, joy, sadness, surprise) of a set of texts using a naive Bayes classifier trained on Carlo Strapparava and Alessandro Valitutti’s emotions lexicon.”

# Perform Sentiment Analysis

# classify emotion

class_emo = classify_emotion(some_txt, algorithm='bayes', prior=1.0)

# get emotion best fit

emotion = class_emo[,7]

# substitute NA's by 'unknown'

emotion[is.na(emotion)] = 'unknown'

# classify polarity

class_pol = classify_polarity(some_txt, algorithm='bayes')

# get polarity best fit

polarity = class_pol[,4]

# Create data frame with the results and obtain some general statistics

# data frame with results

sent_df = data.frame(text=some_txt, emotion=emotion, polarity=polarity, stringsAsFactors=FALSE)

# sort data frame

sent_df = within(sent_df, emotion <- factor(emotion, levels=names(sort(table(emotion), decreasing=TRUE))))

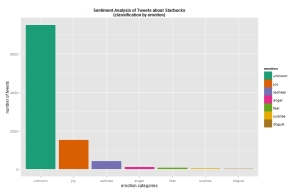

With the sentiment analysis done, we can start to look at the results. Let’s look at a histogram of the number of tweets with each emotion:

# Let’s do some plots of the obtained results

# plot distribution of emotions

ggplot(sent_df, aes(x=emotion)) +

geom_bar(aes(y=..count.., fill=emotion)) +

scale_fill_brewer(palette='Dark2') +

labs(x='emotion categories', y='number of tweets') +

ggtitle('Sentiment Analysis of Tweets about Starbucks\n(classification by emotion)') +

theme(plot.title = element_text(size=12, face='bold'))

Most of the tweets have unknown emotional content. But that sort of makes sense when there are tweets such as “With risky, diantri, and Rizky at Starbucks Coffee Big Mal”.

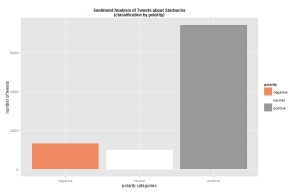

Let’s get a simpler plot, that just tells us whether the tweet is positive or negative.

# plot distribution of polarity

ggplot(sent_df, aes(x=polarity)) +

geom_bar(aes(y=..count.., fill=polarity)) +

scale_fill_brewer(palette='RdGy') +

labs(x='polarity categories', y='number of tweets') +

ggtitle('Sentiment Analysis of Tweets about Starbucks\n(classification by polarity)') +

theme(plot.title = element_text(size=12, face='bold'))

So it’s clear that most of the tweets are positive. That would explain why there are more than 21,000 Starbucks stores around the world!
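To put numbers behind a chart like this, you can tabulate the polarity column as percentages. The sketch below uses a small made-up vector in place of sent_df$polarity, and `polarity_share` is a hypothetical helper name.

```r
# Percentage of tweets in each polarity category, rounded to one decimal.
polarity_share <- function(polarity) {
  round(100 * prop.table(table(polarity)), 1)
}

# Made-up labels standing in for sent_df$polarity
example_polarity <- c("positive", "positive", "negative", "neutral", "positive")
shares <- polarity_share(example_polarity)
shares
```

With real results, you would call polarity_share(sent_df$polarity) instead.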

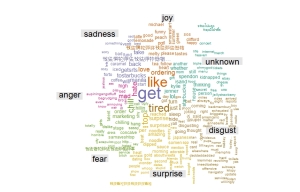

Finally, let’s look at the words in the tweets, and create a word cloud that uses the emotions of the words to determine their locations within the cloud.

# Separate the text by emotions and visualize the words with a comparison cloud

# separating text by emotion

emos = levels(factor(sent_df$emotion))

nemo = length(emos)

emo.docs = rep('', nemo)

for (i in 1:nemo)

{

tmp = some_txt[emotion == emos[i]]

emo.docs[i] = paste(tmp, collapse=' ')

}

# remove stopwords

emo.docs = removeWords(emo.docs, stopwords('english'))

# create corpus

corpus = Corpus(VectorSource(emo.docs))

tdm = TermDocumentMatrix(corpus)

tdm = as.matrix(tdm)

colnames(tdm) = emos

# comparison word cloud

comparison.cloud(tdm, colors = brewer.pal(nemo, 'Dark2'),

scale = c(3,.5), random.order = FALSE, title.size = 1.5)

Word clouds give a more intuitive feel for what people are tweeting. This can help you validate the categorical results you saw earlier.
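A quick numerical cross-check of the cloud is to count the most frequent words for each emotion. The following base R sketch does not use the tm or wordcloud packages; `top_terms` is a hypothetical helper, and the small data frame is made up to stand in for sent_df.

```r
# Return the n most frequent words in a set of texts as a named vector.
top_terms <- function(texts, n = 3) {
  words <- unlist(strsplit(texts, "\\s+"))
  words <- words[nzchar(words)]
  tab <- table(words)
  counts <- sort(setNames(as.vector(tab), names(tab)), decreasing = TRUE)
  counts[seq_len(min(n, length(counts)))]
}

# Made-up data standing in for sent_df
sample_df <- data.frame(
  text    = c("love this latte", "love the new latte love", "queue too long"),
  emotion = c("joy", "joy", "anger"),
  stringsAsFactors = FALSE
)

# Most frequent word for each emotion
by_emotion <- lapply(split(sample_df$text, sample_df$emotion), top_terms, n = 1)
by_emotion$joy
```

Words that dominate a section of the cloud should also appear at the top of the matching list.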

And that’s it for this post! I hope that you can get Twitter sentiment analysis working on your computer too.

Hey, thanks for the post! I was trying to work with the twitteR package for several days.

A question: I am also interested in the Rfacebook package. The problem is that I have about 1,000 friends, but it only shows me the information for 3 of them. Do you also know that package? Thank you very much!

Hi FerLevano. Sorry but I haven’t done any work using R with Facebook.

Hi Colin, in the first set of R script, does “&lt;-” mean “<-”?

secondly when i ran the following line of code :

twitCred <- OAuthFactory$new(consumerKey = api_key, consumerSecret = api_secret, requestURL = reqURL, accessURL = accessURL, authURL = authURL)

It gives the error message below, not sure what i'm doing wrong.

Error: object 'OAuthFactory' not found

Hi Ken,

On the first point, yes it was “<-” but WordPress did something weird to it when I published the blog. I’ve now edited the script to use “=”, which WordPress doesn’t seem to have a problem with. Thanks for letting me know.

On the second point, OAuthFactory is inside the ROAuth package, which the script loads. Can you check whether ROAuth loaded correctly on your machine? Also check which versions of ROAuth and twitteR you have loaded. You need to have the latest versions of each package, which would be 0.9.6 and 1.1.8 respectively. The way to check this is to use the sessionInfo() function. If you’re still having problems, then let me know.

Colin

In Step 1, line 18 and 20 – These are the steps where I get error messages of ‘OAuthFactory’ not found and ‘twitCred’ not found. Any idea what I am doing wrong? I’d really like to reproduce your sentiment analysis code. Thanks

Hi Ken,

See my comments above. Which version of R are you using? Some of this won’t work on earlier versions of R. I have tested it on R versions 3.1.3 and 3.2.0 running on a Windows PC. If you’re still having problems, then run sessionInfo() , paste the results in your comments here, and I will see whether I can figure out what is happening.

Colin

Hi Colin,

I was using R v3.1.2, which may explain why it didn’t work. I downloaded v3.2.1 on Mac OS 10.9 and was able to run the script in Step 3. In an earlier step, I assigned http://test.de/ as my URL, thus the web browser that opened from Step 3 did not give me a similar one as yours and therefore I’m unable to generate the PIN code to enter into R. Were you able to use http://test.de/ ? Thanks

Hi Ken,

I was using the exact values shown on the screen images i.e. http://test.de/ . That setting does not matter at all. Once you use the handshake command, R should either open the authorisation web page, or show you a link URL that you can paste into your web browser. What is your R doing when you execute the handshake command? What web page does it show you?

Sometimes problems may occur if your firewall blocks R from contacting the outside world, so maybe test it with your firewall switched off.

Hi Colin,

Please ignore the above comment, I was able to generate the PIN code. I’m now trying to install the sentiment package with no success. I will try again and hopefully get around that.

Thanks

Below is the error message from installing the sentiment package: Any ideas will be appreciated, thank you.

> if (!require("pacman")) install.packages("pacman")

> pacman::p_load(devtools)

>

> install_url("http://cran.r-project.org/src/contrib/Archive/sentiment/sentiment_0.2.tar.gz")

Downloading package from url: http://cran.r-project.org/src/contrib/Archive/sentiment/sentiment_0.2.tar.gz

Installing sentiment

Skipping 1 packages not available: Rstem

'/Library/Frameworks/R.framework/Resources/bin/R' --no-site-file --no-environ \

--no-save --no-restore CMD INSTALL \

'/private/var/folders/5t/0086c30h8xjdvr001s6zsv780000gr/T/RtmpMfBXUS/devtools7e75cea535c/sentiment' \

--library='/Library/Frameworks/R.framework/Versions/3.2/Resources/library' \

--install-tests

ERROR: dependency ‘Rstem’ is not available for package ‘sentiment’

* removing ‘/Library/Frameworks/R.framework/Versions/3.2/Resources/library/sentiment’

Error: Command failed (1)

Hi Ken,

Try this script before trying to install the sentiment package:

if (!require("pacman")) install.packages("pacman")

pacman::p_load(devtools, installr)

install.Rtools()

install_url("http://cran.r-project.org/src/contrib/Archive/Rstem/Rstem_0.4-1.tar.gz")

Let me know if that fixes the problem, and if so, then I will update my script to include it.

Hi Colin,

Yes, that script allowed me to install sentiment package – Many thanks!

In Step 5, the following script gives an error message:

setup_twitter_oauth(api_key,api_secret,access_token,access_token_secret)

[1] “Using direct authentication”

Error in check_twitter_oauth() : OAuth authentication error:

This most likely means that you have incorrectly called setup_twitter_oauth()’

The alternative also doesn’t work:

> setup_twitter_oauth(api_key,api_secret)

[1] “Using browser based authentication”

Error in loadNamespace(name) : there is no package called ‘base64enc’

Hi Ken,

Sorry, I forgot to install and load the base64enc package within the R script that I posted. I have just fixed the error. Note the change to line 2 of the script listed in Step 5.

Colin

Hi Colin,

That works after installing base64enc package.

I got as far as the Sentiment Analysis, last line generated an error message:

> # sort data frame

> sent_df = within(sent_df,

+ emotion = factor(emotion, levels=names(sort(table(emotion), decreasing=TRUE))))

Error in eval(expr, envir, enclos) : argument is missing, with no default

Hi Ken,

It turns out that I couldn’t substitute = for <- in that line of code. The correct code is:

sent_df = within(sent_df, emotion <- factor(emotion, levels=names(sort(table(emotion), decreasing=TRUE))))

Hi Colin,

Just to let you know that even though there was an error on the # sort data frame step above, I was able to generate similar charts but with a slight change in the ordering of the bars due to my using the unsorted “sent_df”.

I just want to thank you very much for your patience in going through the script and helping resolve the errors I encountered. The journey was worth it! I will spend some more time going through and understanding the script and try it on other data. Thank you!

Ken

Hi Ken,

I’m glad that someone went to the trouble to test out my R script and send me feedback 🙂

Colin

Hi Colin,

I didn’t know this pacman package (using ‘sentiment’ and ‘wordcloud’ for this kind of stuff), so thank you for pointing it out in this well written post.

By the way, one point I am not really satisfied with when using this ‘sentiment packages’ is the lack of consideration for the number of retweets.

I mean: if there are two tweets, one with ‘positive’ sentiment the other one with a ‘negative’ sentiment, and the second one received 30 retweets I think the second one should be weighted as heavier than the first when computing the total sentiment.

What’s your opinion about that?

Obviously, in order to apply this kind of reasoning you need to carry with you the ‘number of RT’ information from the result of the query to the Twitter Search API.

I would be really interested in knowing your opinion about the topic.

Thank you!

Hi Andrea,

I think that the best way is to include each retweet in the sentiment analysis. That way each gets a weight in the results. There’s an opportunity to improve on the sentiment package, but the problem is finding the time to create the data and build an alternative model.

Colin
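One way to approximate this without downloading every retweet separately is to weight each tweet by one plus its retweet count. This is only a sketch of that idea, not a feature of the sentiment package: `weighted_polarity` is a hypothetical helper, and it assumes the retweet counts are available, for example from the retweetCount column that twitteR’s twListToDF function returns.

```r
# Share of each polarity when every tweet counts once plus once per retweet.
weighted_polarity <- function(polarity, retweets) {
  weights <- 1 + retweets
  totals <- tapply(weights, polarity, sum)
  totals / sum(totals)
}

# Andrea's example: one positive tweet, one negative tweet with 30 retweets
polarity <- c("positive", "negative")
retweets <- c(0, 30)
weighted <- weighted_polarity(polarity, retweets)
weighted
```

In this example the negative tweet carries 31 of the 32 units of weight, so it dominates the total.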

Hi Colin,

I just want to say thanks! Your tutorial is extremely helpful!

Best,

Jens

I’m glad you like it Jens 🙂

Amazing blog with so much of useful information. Thanks a lot for sharing.

Somehow I wasn’t able to get a PIN for twitter app as suggested in the first step. So I used “setup_twitter_oauth(api_key, api_secret)”.

Thanks Avinash.

I have also found the Twitter authorisation to be unreliable. It works on one of my computers, but not on another. So I have used a similar fall-back approach to what you suggest.

good information ,thank sharing!!!

Here is the error I got after I ran the classify_emotion code:

class_emo = classify_emotion(some_txt, algorithm="bayes", prior=1.0)

Error in simple_triplet_matrix(i = i, j = j, v = as.numeric(v), nrow = length)…bla bla…..NAs introduced by coercion

Hi Jack,

I have noticed that sometimes it can break if there are non-standard characters in the twitter text. Can you check whether your script works on a small subset of text lines that you have manually validated by reading them?

Also, check which version you have of the R libraries.

Colin

im getting this error after running the wordcloud

Error in .Call(“is_overlap”, x11, y11, sw11, sh11, boxes1) :

“is_overlap” not resolved from current namespace (wordcloud)

can you help

I have the same problem as well…

can anyone help?

Hi Tokey,

This appears to be due to a recent change in the way that R resolves references to external DLLs. Please reinstall the latest version of wordcloud.

Colin

A big thank you Colin for this post. I was trying to work again with Twitter, but I was too lazy to deal with the new authentification process. It works now perfectly

This was very helpful. Many thanks!

This is a great post. I am trying to replicate yours, but I can’t resolve the following simple error. Would you please help me?

Error: could not find function “classify_emotion”

It seems that the “sentiment” package is not installed properly…but when I run “library(sentiment)” it works properly…not library(Rstem)…

I am using R 3.2.1 and R studio…

Thanks for your advice!

Hi Yonghwan, you need to use the version of Rstem that matches the archive of sentiment.

For me it was linked to the fact that Java was not installed in 64bits on my computer.

if (!require("pacman")) install.packages("pacman")

pacman::p_load(devtools, installr)

install.Rtools()

install_url("http://cran.r-project.org/src/contrib/Archive/Rstem/Rstem_0.4-1.tar.gz")

i tried running this script of yours but it constantly keeps giving me this error

> if (!require("pacman")) install.packages("pacman")

Loading required package: pacman

> pacman::p_load(devtools, installr)

Warning in install.packages :

package ‘installr’ is not available (for R version 3.2.0)

Warning messages:

1: In library(package, lib.loc = lib.loc, character.only = TRUE, logical.return = TRUE, :

there is no package called ‘installr’

2: In pacman::p_load(devtools, installr) : Failed to install/load:

installr

> install.Rtools()

Error: could not find function “install.Rtools”

Hi Shabbs. You need to go to the CRAN page and download the version of Rtools that matches your system and version of R

Hi Shabbs, I am facing the same problem , if you get the solution then please share.

Very Nice summary.. Thank you so much.. could you please guide few study materials on text analysis in details ?

Hi Balamurugan. Unfortunately I’m not a text analysis expert. Do any of my readers have some suggestions to answer Balamurugan’s question?

where to actually save and run the codes

Hi aishwarya. This is R code. You need to install R from https://www.r-project.org/ and then you need to install a user interface. I like to use RStudio as my user interface, and you can download it from https://www.rstudio.com/

Hello Colin!

First of all, congratulations on this tutorial! It’s very useful for my dissertation!

My question:

While running the code for the plots and the wordcloud, I get the error message:

Error in .Call(“loop_apply”, as.integer(n), f, env) :

“loop_apply” not resolved from current namespace (plyr)

any ideas about what am i doing wrong?

Thanks!

Maria

Hi Maria,

This appears to be a versioning problem within R. See http://stackoverflow.com/questions/31352789/keep-hitting-the-error-loop-apply-not-resolved-from-current-namespace-plyr

The solution is to reinstall the latest version of the plyr package

Colin

Nice walkthrough- I’d gotten a bit out of date on how to search, and you saved me some pain testing things.

setup_twitter_oauth can be incredibly painful to diagnose why it might not be completing the 4 setting version of the dance- between network issues like proxy servers, making sure library versions match each other, and caching effects. For the last, what might help is being able to not use a httr cached version of the setup when going into a twitteR session, by

options(httr_oauth_cache=F)

setup_twitter_oauth(api_key,api_secret,access_token,access_token_secret)

Alternatively, you can flip it to TRUE if there is a .httr-oauth file you want to use.

Thanks for the great advice David! 🙂

Could you please help me with 2 error messages that I get when I run the code for the plot and the wordcloud?

the error message for the plot:

Error in .Call(“loop_apply”, as.integer(n), f, env) :

“loop_apply” not resolved from current namespace (plyr)

and the error message for the wordcloud:

Error in .Call(“is_overlap”, x11, y11, sw11, sh11, boxes1) :

“is_overlap” not resolved from current namespace (wordcloud)

I would highly appreciate your reply, as I want to use this for my dissertation, and of course I will mention your website in my bibliography.

Thank you,

Maria

Hi Maria,

These two errors are both caused by recent changes to the way that R resolves references to external DLLs. Please reinstall the latest versions of the wordcloud and plyr packages.

Colin

Thank you so much!!! It worked just fine!

Maria

Hi Colin,

I have a problem while creating the handshake. I’m getting a timeout when I execute

twitCred$handshake(cainfo = system.file("CurlSSL", "cacert.pem", package = "RCurl"))

and i have used the following libraries

library(twitteR)

library(ROAuth)

library(RCurl)

Could you get me through this .?

Hi Vimal,

It might be trying to open a web browser, but your security settings may be blocking it from doing so.

Colin

I am also getting the timed out error. I am working on my office computer, which may be preventing R from opening the web browser. Is there a way to obtain the link without having the code open the browser?

Hi Sir,

First of all Thank you.

Sir,I had gone through your given procedure,it works very nicely.

But when I close the R window,then how can I proceed to get the comments back using searchTwitter command.

I’m sorry but I don’t understand the question. Why do you want to close R if that’s the platform you are using for your analysis?

Hi Sir,

Thank you for the post.

Sir, I have gone through this procedure.

It works awesome.

But when I close the R window,then how can I proceed to get the comments back or how can I do my further analysis with the searchTwitter command.

Dear Colin,

I would like to ask your advice to solve the problem as follows.

I collected some tweets, and saved it by using the codes below for future analysis purpose.

sometweet = searchTwitter("keywordhere", n=2000, lang="en")

df = do.call(rbind, lapply(sometweet, as.data.frame))

write.csv(df, file = "1.csv")

some_txt = sapply(sometweet, function(x) x$getText())

write.csv(some_txt, file = "2.csv")

So, I have two data sets, which are CSV format.

Now, it is time to do sentiment analysis by using the saved data. However, the results showed nothing. I think I missed something when I import the data set. I used the following simple codes to import the data to my Rstudio.

some_txt = read.csv(file="2.csv")

Thank you for your time and advice.

Hi Yonghwan,

Does your new search contain any text?

Does 2.csv contain any text?

Remember to set row.names = FALSE when using write.csv

I note from your sample code that some_txt is originally an array, but that you read it back as a data.frame. These are different data types. You need to use consistent data types.

Colin

Dear Colin,

Thanks for your advice.

The “2.csv” file contains texts.

I haven’t added “row.names = FALSE” when I save the file.

If you do not mind, would you please give specific R codes to successfully import the data set in order to proceed sentiment analysis?

Thank you for your help in advance.

Yonghwan,

Don’t save as text files. Instead use the save and load functions to save the R object. https://stat.ethz.ch/R-manual/R-devel/library/base/html/save.html

Colin
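As a rough illustration of that advice, the sketch below uses saveRDS and readRDS, close relatives of save and load that store a single R object, and contrasts them with a CSV round trip. The example text and the temporary file paths are made up for illustration.

```r
# Made-up tweet texts standing in for the cleaned some_txt vector
some_txt <- c("first tweet text", "second tweet text")

# Saving the R object itself preserves its type exactly
rds_path <- tempfile(fileext = ".rds")
saveRDS(some_txt, rds_path)
restored <- readRDS(rds_path)
identical(restored, some_txt)

# A CSV round trip comes back as a data frame, not a character vector
csv_path <- tempfile(fileext = ".csv")
write.csv(some_txt, csv_path, row.names = FALSE)
from_csv <- read.csv(csv_path, stringsAsFactors = FALSE)
class(from_csv)

# If you must use CSV, pull the text column back out before analysis
from_csv_txt <- from_csv[[1]]
```

The classification functions are called with a character vector in the post, which is why the data frame returned by read.csv needs converting first.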

Hello Sir, thank you for this amazing tutorial. It helped me a lot.

Sir, I would like to ask you about something. My teacher asked me to do a sentiment analysis of a brand, and the tweets from the brand’s own account must not be included in the analysis. What should I do, sir?

You need to search for tweets from other accounts that reference the brand e.g. search tweets for “Pepsi” but exclude tweets written by the official Pepsi tweet account

what script should I use to exclude the official account, sir?

I think that your teacher wants you to figure that out for yourself instead of getting someone else to do your homework for you. A good place to start would be to understand the syntax of a twitter search, by reading https://dev.twitter.com/rest/public/search

You could also check the sender of each Twitter message within your search results, and skip over those from the official account.

yes, sir.. thank you for your help 🙂

I hope you make another tutorial to help beginner like me to understand more about text mining. Thanks for the link.

Hi,

Great post. I have followed this post to try to understand sentiment analysis on Twitter using R, and it’s been very helpful. I am getting an error on executing:

class_emo = classify_emotion(some_txt, algorithm="bayes", prior=1.0)

The error is as follows:

Error in simple_triplet_matrix(i = i, j = j, v = as.numeric(v), nrow = length(allTerms), :

‘i, j, v’ different lengths

In addition: Warning messages:

1: In mclapply(unname(content(x)), termFreq, control) :

all scheduled cores encountered errors in user code

2: In simple_triplet_matrix(i = i, j = j, v = as.numeric(v), nrow = length(allTerms), :

NAs introduced by coercion

Any suggestions to rectify this will be greatly helpful.

Thank you

Hi Mahavir,

I haven’t seen that error before. So I’d suggest that you:

1) check that you have the latest version of each of the R packages

2) check that you have valid text data from your Twitter search

3) check that you have an up to date version of Java installed

Colin

Great post! Is there any way to test the accuracy of the classification? or any other validity and reliability tests?

For example, mean precision, accuracy, recall, or F-values… can these be tested? If yes, would you please share R examples that are directly applicable to your post?

Thanks very much for your advice and help,

Hi Yonghwan,

To test the accuracy you would need a source of truth. What is your source of truth for classifying twitter feeds?

Colin

Thank you so much. But I am getting errors in ggplot2(), and also after giving the command

setup_twitter_oauth(api_key,api_secret)

it is not opening the browser, but rather giving the following error.

Please help me…

[1] “Using browser based authentication”

Error in curl::curl_fetch_memory(url, handle = handle) :

Couldn’t resolve host name

Hi Ravi,

That sounds like your firewall might be blocking the call, or you aren’t connected to the internet. Or have you changed the script to use a different URL or a different Twitter feed?

Colin

Thanks for your response to my question about precision, accuracy, recall, or F-values to test the reliability and validity of the emotion classification of tweets.

However, due to my lack of experience with this, it is a little hard to understand your comment about “a source of truth.”

Instead, would it be possible to show some example R code for testing that is directly applicable to your Starbucks post? It would be a great help in understanding this, as well as in applying it to my own analysis.

Thanks very much for this learning opportunity!

Best regards,

Yonghwan,

What I mean is that you can’t measure the accuracy unless you objectively know what the correct answers are. I don’t know the correct answers for Twitter sentiment scoring.
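
If you did have a source of truth, for example a sample of tweets that people have labelled by hand, the usual measures could be computed along these lines. This is only a sketch: the `truth` and `predicted` vectors below are invented for illustration, and are not results from the Starbucks example.

```r
# Hypothetical hand-labelled "truth" and model output for six tweets
truth     <- c("positive", "negative", "positive", "negative", "positive", "negative")
predicted <- c("positive", "positive", "positive", "negative", "negative", "negative")

# Confusion matrix: rows are the true labels, columns are the predictions
confusion <- table(truth = truth, predicted = predicted)

# Treat "positive" as the class of interest
tp <- confusion["positive", "positive"]  # correctly predicted positive
fp <- confusion["negative", "positive"]  # predicted positive, truly negative
fn <- confusion["positive", "negative"]  # predicted negative, truly positive

accuracy  <- sum(diag(confusion)) / sum(confusion)
precision <- tp / (tp + fp)
recall    <- tp / (tp + fn)
f1        <- 2 * precision * recall / (precision + recall)

round(c(accuracy = accuracy, precision = precision, recall = recall, f1 = f1), 3)
```

The hard part is not the arithmetic but obtaining labels that you trust.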

Colin

Hey, your post is amazing. It helped me a lot. I had been trying to get Twitter sentiment analysis working for several days and was unable to implement it.

But after following the steps in your post I have successfully implemented it.

Thanks,

shweta

Thank you a lot!!

I was having problems with code from a book about R that I bought

The book was expensive and did not have sufficient explanation

Moreover, the OAuthFactory function didn’t work at all

However, your code came to me like a light in the darkness

Everything works well, and I will need to look at the code in more detail so that I can code it myself

Anyway, thanks a lot

Thanks much for the code. It simply works.

I get this error also

Error in simple_triplet_matrix(i = i, j = j, v = as.numeric(v), nrow = length(allTerms), :

‘i, j, v’ different lengths

In addition: Warning messages:

1: In mclapply(unname(content(x)), termFreq, control) :

all scheduled cores encountered errors in user code

2: In simple_triplet_matrix(i = i, j = j, v = as.numeric(v), nrow = length(allTerms), :

NAs introduced by coercion

Checked all 3

1) check that you have the latest version of each of the R packages

2) check that you have valid text data from your Twitter search

3) check that you have an up to date version of Java installed

Hi David,

I’m not the author of that R package, so I’m not the best person to tell you why that error occurs. But I suggest that you run that line of code against just a few lines of text at a time until the error occurs, then look at the text that causes the error.
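
Something like the following sketch could automate that search. It assumes the failing call can be wrapped in a function that takes a single piece of text. `find_failing_rows` and `fake_classifier` are names made up for this illustration; the stand-in classifier simply fails on text that is not valid UTF-8.

```r
# Return the positions of the elements of `txt` for which `fn` throws an error.
find_failing_rows <- function(txt, fn) {
  failed <- vapply(txt, function(one_text) {
    tryCatch({
      fn(one_text)
      FALSE                      # the call succeeded
    }, error = function(e) TRUE) # the call raised an error
  }, logical(1), USE.NAMES = FALSE)
  which(failed)
}

# Hypothetical stand-in for the real classifier: fails on invalid UTF-8
fake_classifier <- function(x) {
  if (!validUTF8(x)) stop("invalid text")
  nchar(x)
}

sample_txt <- c("great coffee", "bad\xffbytes", "slow service")
bad <- find_failing_rows(sample_txt, fake_classifier)
bad
```

With the real script you would pass something like `function(x) classify_emotion(x, algorithm = "bayes", prior = 1.0)` as `fn`. Bear in mind that an error which only appears when many rows are processed together might not show up one row at a time.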

Colin

Thanks Colin will do. Appreciate your help

Hi Colin,

Great post, indeed!

I keep getting this error:

Error in setup_twitter_oauth(api_key, api_secret, access_token, access_token_secret) :

could not find function “oauth_app”

Any insights on this?

Thanks!

Hi Daniel,

From what I’ve read on stack exchange, you may have to update your version of the httr package.

Colin

Hi Collin,

I have to say this is one of the best sentiment analysis tutorials out there, especially on installing the sentiment package on the latest version of R. Thanks a bunch.

I wish you could explain to a beginner the use of gsub and also the tolower function.

Manju.

Hi Manju,

gsub is quite easy to use and very fast. It finds every instance of a substring matching your search, and then substitutes another substring. For straightforward substitutions, like replacing “dog” with “cat”, the syntax is easy. Things get more complicated if you want to use regular expressions inside gsub. I’m not much of an expert on regular expressions 😦 so I google for solutions on Stack Overflow.

The tolower function changes all of the text to lower case e.g. “Colin” becomes “colin”. This can be useful in text mining when you want to treat all capitalisation variants of a word as the same.
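
Here is a small illustration of both functions on a made-up tweet. The regular expressions are common patterns for this kind of cleaning, and are not necessarily the exact ones used in the tutorial script.

```r
txt <- "RT @Colin: My DOG loves Starbucks!!! http://example.com/abc"

# Plain substitution: replace every "DOG" with "cat"
step1 <- gsub("DOG", "cat", txt, fixed = TRUE)

# Regular expressions: drop web links, then @mentions, then punctuation
step2 <- gsub("http\\S+", "", step1, perl = TRUE)
step3 <- gsub("@\\w+", "", step2)
step4 <- gsub("[[:punct:]]", "", step3)

# Collapse repeated spaces and trim the ends
step5 <- trimws(gsub("\\s+", " ", step4))

# Lower case, so "Starbucks" and "starbucks" count as the same word
result <- tolower(step5)
result
# "rt my cat loves starbucks"
```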

Hi Colin,

Thanks a ton for this post! Works great. I’m in my learning stage, and I just wanted to check if there is a way to change the emotion types by any chance.

Hi,

The script uses a pre-built sentiment model. To identify other emotions you would need to train your own model. What emotions would you like to identify? Maybe I could write a blog about how to create and run a custom sentiment model.

Colin

Hello Collin,

I keep getting the error – Error: could not find function “classify_emotion”, despite loading the sentiment and Rstem packages. I am using R 3.3.1. Can you please suggest a solution?

Hi Gaurav,

Sometimes package installations get corrupted. I suggest that you reinstall the sentiment package.

Colin

P.S. My name is “Colin” not “Collin”

I am getting an error: Error: could not find function “classify_emotion” after the step class_emo = classify_emotion(some_txt, algorithm='bayes', prior=1.0). Please suggest a solution.

Hi Gaurav,

It sounds like the sentiment package has not been installed correctly, or maybe not loaded. I suggest that you reinstall the sentiment package.
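
To tell those two cases apart, a quick check along these lines may help. It is only a sketch using base R functions; the helper names `package_exports` and `package_attached` are made up for this example.

```r
# TRUE if package `pkg` is installed and exports a function named `fn`
package_exports <- function(fn, pkg) {
  requireNamespace(pkg, quietly = TRUE) && fn %in% getNamespaceExports(pkg)
}

# TRUE if `pkg` has been attached with library(), so that its functions
# can be called without the pkg:: prefix
package_attached <- function(pkg) {
  paste0("package:", pkg) %in% search()
}

package_exports("classify_emotion", "sentiment")
package_attached("sentiment")
```

If the first check is FALSE, the installation is the problem. If the first is TRUE but the second is FALSE, running `library(sentiment)` before calling `classify_emotion` should be enough.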

Colin

Hi Colin,

I keep receiving the error:

Downloading package from url: http://cran.r-project.org/src/contrib/Archive/Rstem/Rstem_0.4-1.tar.gz

Error in loadNamespace(name) : there is no package called ‘curl’

This occurs during the 4th line of step 4.

Any ideas?

Thank you

Hi,

It looks like some of the R packages now use the “curl” package internally, and this is a breaking change. I just saw this same error in another project I have been working on.

The solution is to install the curl package. You can do this using the following command:

install.packages("curl", repos = "http://cran.us.r-project.org")

Colin

Hi Colin, thanks for your stellar blog post. It was so helpful to map out the process from beginning to end. I’ve successfully run through the whole process, which was really rewarding. There was one tip I thought I’d mention, which I had to find the answer to myself: I needed to convert the text to UTF-8 after the punctuation removal step, otherwise the sentiment analysis step threw an error.

some_txt <- iconv(some_txt, to = "utf-8")

I was wondering what you would recommend if you wanted to process each tweet individually into a separate column to identify the tweet's sentiment one row at a time?

Hi Sarah,

Thanks for the tip. It’s definitely useful.

Since I’m based in Asia, I’ve increasingly had to help people whose R text mining scripts don’t work, and the cause turns out to be encoding issues. Many users in Asia have their computers set up for other languages, e.g. Chinese. I’ve also had encoding problems arise when moving data and scripts between Windows and Linux environments.
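
As a rough illustration of what that conversion does, here is a sketch using base R’s `iconv`. The sample strings are made up, and the handling of unconvertible bytes through `sub` can vary a little between platforms.

```r
# A string stored as Latin-1 bytes, as might arrive from a non-UTF-8 system
latin1_txt <- "caf\xe9"
Encoding(latin1_txt) <- "latin1"

# Convert from a known source encoding to UTF-8
utf8_txt <- iconv(latin1_txt, from = "latin1", to = "UTF-8")

# When stray bytes are mixed into otherwise valid text, `sub` asks iconv
# to replace whatever it cannot convert (here with nothing)
messy_txt <- c("good text", "bad\xffbyte")
clean_txt <- iconv(messy_txt, from = "UTF-8", to = "UTF-8", sub = "")

# Check the results before passing the text on to the sentiment step
validUTF8(utf8_txt)
validUTF8(clean_txt)
```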

Colin

Yes, that conversion to UTF-8 solved the problem that David Alexander mentioned on May 7 about the triplet matrix.

Actually, don’t worry about my question about individual classification of text rows, I just realised that was completed in the sent_df step, that’s super.

getting this message while generating the pin

Example Domain

This domain is established to be used for illustrative examples in documents. You may use this domain in examples without prior coordination or asking for permission.

please help in rectifying the error

Hi Omkar,

Sorry I’ve never seen this error before, but it may be related to the use of http://test.de/

Maybe you should try an alternative url instead of http://test.de/

Colin

while installing sentiment package getting error

* installing *source* package ‘sentiment’ …

** package ‘sentiment’ successfully unpacked and MD5 sums checked

** R

** data

** preparing package for lazy loading

Error : package ‘NLP’ required by ‘tm’ could not be found

ERROR: lazy loading failed for package ‘sentiment’

* removing ‘C:/Users/omkar/Documents/R/win-library/3.3/sentiment’

Error: Command failed (1)

Hi Omkar,

I have seen this error sometimes. The sentiment package needs the tm package to be installed first. If all were working OK, then this would happen automatically, but sometimes it does not, maybe because the sentiment package has been discontinued. So the solution is to manually install the tm package before running the script.
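
One way to check for the missing pieces before installing the sentiment package is sketched below. `missing_packages` is a helper name made up for this example, and the install line is left commented out so that nothing is downloaded until you choose to run it.

```r
# Return the names in `pkgs` that are not currently installed
missing_packages <- function(pkgs) {
  installed <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
  pkgs[!installed]
}

# tm depends on NLP, which is the package named in the error message
to_install <- missing_packages(c("NLP", "tm"))

if (length(to_install) > 0) {
  message("Install these before the sentiment package: ",
          paste(to_install, collapse = ", "))
  # install.packages(to_install)  # uncomment to install from your CRAN mirror
}
```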

Colin

Hi Colin.

I am using RStudio Version 0.99.902

I am unable to install the sentiment package. I receive the following error:

package ‘http://cran.r-project.org/src/contrib/Archive/sentiment/sentiment_0.2.tar.gz’ is not available (for R version 3.3.1)

Please advise on a best solution to rectify this.

Thank you

Hi Segz,

Run the following two lines to ensure that you have the pre-requisites:

pacman::p_load(NLP, tm)

install.Rtools()

The Rtools installation is interactive. Do not run it as part of a batch of multiple lines of R script. Once you have successfully run these lines, then you can run the following line:

install_url('http://cran.r-project.org/src/contrib/Archive/sentiment/sentiment_0.2.tar.gz')

Today I have successfully installed the sentiment package on R version 3.3.1 🙂

Colin
